Why it matters: In his GTC 2023 keynote, Nvidia CEO Jensen Huang highlighted a new era of breakthroughs aimed at bringing AI to every industry. Nvidia has partnered with tech giants like Google, Microsoft and Oracle to make advances in AI training, deployment, semiconductors, software libraries, systems and cloud services. Other partnerships and developments announced include companies such as Adobe, AT&T and automaker BYD.

Huang cited many examples from the Nvidia ecosystem, including Microsoft 365 and Azure users accessing platforms for building virtual worlds, and Amazon using simulation capabilities to train autonomous warehouse robots. He also cited the meteoric rise of generative AI services such as ChatGPT, and called its success “the iPhone moment of AI.”

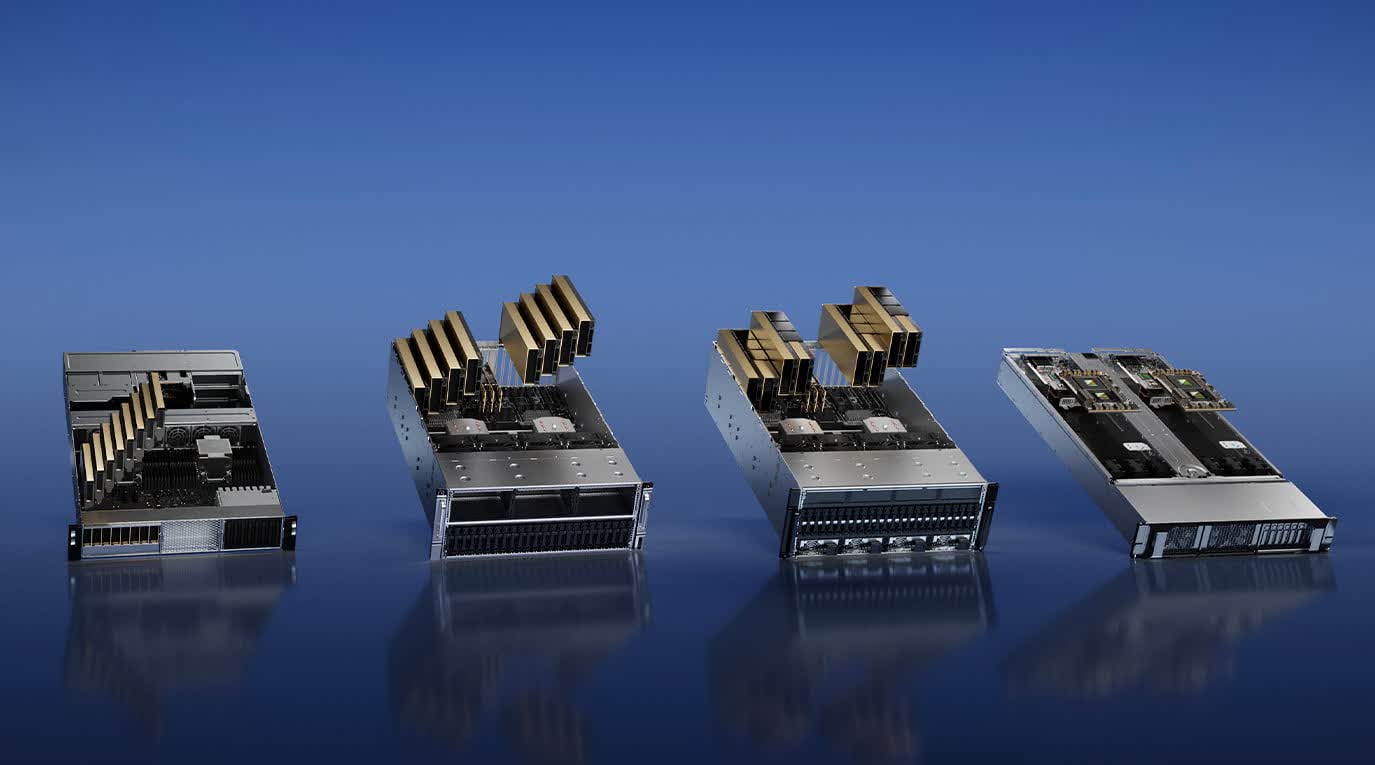

Based on Nvidia’s Hopper architecture, Huang announced a new H100 NVL GPU in an NVLink dual-GPU configuration to meet the growing demand for AI and large language model (LLM) inference. The GPU has a Transformer Engine specifically designed to handle models like GPT, reducing the cost of LLM processing. The company claims that a server with four pairs of H100 NVLs can be up to 10 times faster than an HGX A100 for GPT-3 processing.

As cloud computing becomes a $1 trillion industry, Nvidia developed the Arm-based Grace CPU for artificial intelligence and cloud workloads. The company claims 2x the performance of x86 processors within the same power envelope for major data center applications. Meanwhile, the Grace Hopper superchip combines the Grace CPU and Hopper GPU to process the massive datasets commonly found in AI databases and large language models.

Additionally, Nvidia’s CEO claimed that the company’s DGX H100 platform, featuring eight Nvidia H100 GPUs, has become a blueprint for building AI infrastructure. Several major cloud providers, including Oracle Cloud, AWS, and Microsoft Azure, have announced plans to adopt the H100 GPU in their products. Server makers such as Dell, Cisco, and Lenovo are also making systems powered by Nvidia’s H100 GPU.

Clearly, generative AI models are all the rage, and Nvidia is offering new hardware products with specific use cases to run inference platforms more efficiently. The new L4 Tensor Core GPU is a video-optimized general-purpose accelerator that, according to Nvidia, delivers 120x faster performance for AI-driven video and 99% better energy efficiency than CPUs, while the L40 for image generation is optimized for graphics and AI-enabled 2D, video and 3D image generation.

Also read: Has Nvidia won the AI training market?

Nvidia’s Omniverse is also playing a part in the modernization of the auto industry. By 2030, the industry will mark a shift to electric vehicles, new factories and battery gigafactories. Nvidia says Omniverse is being used by major automotive brands for a variety of tasks: Lotus uses it for virtual welding station assembly, Mercedes-Benz uses it for assembly line planning and optimization, and Lucid Motors uses it to build digital stores. BMW is partnering with idealworks for factory robot training and plans to build an electric car factory entirely in the Omniverse.

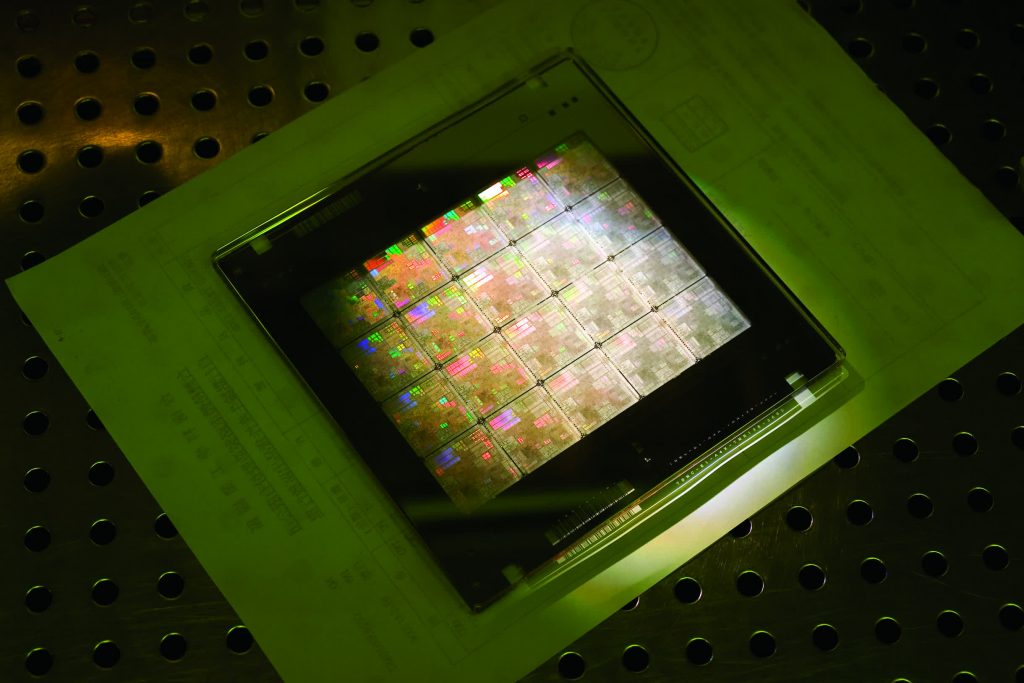

All in all, there are too many announcements and partnerships to mention, but arguably the last big milestone comes on the manufacturing side. Nvidia announced a breakthrough in chip manufacturing speed and energy efficiency with the launch of the “cuLitho” software library, designed to accelerate computational lithography by up to 40 times.

Huang explained that cuLitho can dramatically reduce the amount of computation and data processing required in chip design and manufacturing, which should significantly reduce power and resource consumption. TSMC and semiconductor equipment supplier ASML plan to incorporate cuLitho into their manufacturing processes.