
Why it matters: Just sneaking in before the end of Q1 2022, Intel are finally detailing their first Arc discrete GPUs, as promised. We’ve got all the details, including specs on the GPU dies themselves, the various product configurations that Intel are announcing first, and some of the unique features Intel will be bringing to their graphics family.

As expected, the first Intel Arc GPUs are destined for laptops, which is a little different to what AMD and Nvidia usually do, which is to launch desktop products first. However, Arc desktop cards are still coming. Intel is saying Q2 2022 for those products, and some of what we’re covering today applies to those cards, such as the GPU die details. Intel is doing some sort of small teaser for desktop add-in cards today, which we’ll be covering in our regular coverage.

Intel is preparing three tiers of Arc GPUs, with a similar naming scheme to what we see in CPUs: Arc 3 is at the low end, offering basic discrete graphics with considerably higher performance than integrated; Arc 5 is the mainstream option, equivalent to an RTX 3060 sort of position in the market; and then Arc 7 covers Intel’s highest performing parts.

We’re not expecting Intel’s flagship GPU to necessarily compete with the fastest GPUs from AMD or Nvidia today (RX 6800 and RTX 3080 tiers and above), so Arc 7 is probably going to top out around a mid- to high-end GPU in the market right now.

The first GPUs to hit the market will be Arc 3, coming in April, with Arc 5 and Arc 7 coming in the summer of 2022. It’s looking like an “end of Q2” timeframe, when we’ll likely also see desktop products.

Let’s dive straight into the product specifications, and I’ll start with the GPU dies. The two chips here aren’t the GPU SKUs we’ll see in laptops, but rather the actual dies that Intel is making in the Alchemist series.

The larger die is called ACM-G10; this is like a GA104 or Navi 22 equivalent from Nvidia and AMD, respectively. The smaller die is ACM-G11, which is closer in size to GA107 and Navi 24. Both are fabricated on TSMC’s N6 node, as has been previously announced.

ACM-G10 features 32 Xe cores and 32 ray tracing cores. If you’re more familiar with Intel’s older “execution unit” measurement for their GPUs, 32 Xe cores is the equivalent of 512 execution units, with each Xe core containing 16 vector engines for standard shader work and 16 matrix engines primarily for machine learning work. Intel combines 4 Xe cores into a render slice, so the top ACM-G10 configuration has 8 render slices.
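The conversion between Xe cores, legacy execution units and render slices is simple arithmetic. The sketch below just restates the figures from the paragraph above; the constant and function names are illustrative and not Intel terminology.

```python
# Figures from the paragraph above; names are made up for illustration.
VECTOR_ENGINES_PER_XE_CORE = 16  # each vector engine counts as one legacy "execution unit"
XE_CORES_PER_RENDER_SLICE = 4


def execution_units(xe_cores: int) -> int:
    """Legacy execution-unit count for a given number of Xe cores."""
    return xe_cores * VECTOR_ENGINES_PER_XE_CORE


def render_slices(xe_cores: int) -> int:
    """Number of render slices for a given number of Xe cores."""
    return xe_cores // XE_CORES_PER_RENDER_SLICE


if __name__ == "__main__":
    cores = 32  # full ACM-G10
    print(f"{cores} Xe cores = {execution_units(cores)} EUs, {render_slices(cores)} render slices")
```

The same helpers can be applied to any cut-down configuration by passing its Xe core count.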

We’re also seeing 16 MB of L2 cache in the spec sheet, and a 256-bit GDDR6 memory subsystem. We’re getting PCIe 4.0 x16 with this die, 2 media engines and a 4-pipe display engine, so essentially supporting 4 outputs.

As for die size specifications, Intel told us this larger variant is 406 sq.mm and 21.7 billion transistors. Size-wise, this is larger than AMD’s Navi 22, which is 335 sq.mm and 17.2 billion transistors. It ends up closer to Nvidia’s GA104, which is 393 sq.mm but less dense on Samsung’s 8nm at 17.4 billion transistors.
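To put the "less dense" remark in numbers, transistor density can be worked out directly from the die sizes and transistor counts quoted above. This is a rough sketch using only those quoted figures; the dictionary layout and function name are illustrative.

```python
# (die area in sq.mm, transistors in billions), as quoted in the paragraph above
DIES = {
    "ACM-G10 (TSMC N6)": (406, 21.7),
    "Navi 22": (335, 17.2),
    "GA104 (Samsung 8nm)": (393, 17.4),
}


def density_mtr_per_mm2(area_mm2: float, transistors_billion: float) -> float:
    """Transistor density in millions of transistors per sq.mm."""
    return transistors_billion * 1000 / area_mm2


if __name__ == "__main__":
    for name, (area, transistors) in DIES.items():
        print(f"{name}: {density_mtr_per_mm2(area, transistors):.1f} MTr/sq.mm")
```

On these figures ACM-G10 and Navi 22 land within a few percent of each other, while GA104 is noticeably less dense.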

That should give you rough expectations here: the size and complexity of Intel’s biggest GPU die is similar to the upper mid-tier dies from their competitors, which go into products like the RTX 3070 Ti and RX 6700 XT. Intel don’t have a 500 sq.mm plus die this generation to compete with the biggest GPUs from AMD and Nvidia.

The smaller die is ACM-G11, and that has 8 Xe cores and 8 ray tracing units, so this is 128 execution units or just 2 render slices. The L2 cache is cut down to 4 MB as a result, and the memory subsystem ends up as 96-bit GDDR6. However, while this GPU has just a quarter of the Xe cores of the larger die, we’ll still be getting 2 media engines and 4 display pipelines, which will be very useful for content creators. It also features a PCIe 4.0 x8 interface; Intel didn’t make the mistake of dropping this to x4 like AMD did with their entry-level GPUs.

As for die size, Intel is quoting 157 sq.mm and 7.2 billion transistors. This sits pretty much between AMD’s Navi 24 and Nvidia’s GA107. Navi 24 is tiny at just 107 sq.mm and 5.4 billion transistors. Nvidia hasn’t spoken officially about GA107, but we’ve measured it to be roughly 200 sq.mm in laptop form factors.

With only 8 Xe cores in the ACM-G11 design, this isn’t that much larger than the integrated GPU Intel includes with 12th-gen Alder Lake CPUs. Those top out at 96 execution units, making ACM-G11 just 33% larger, so this is firmly an entry-level GPU. However, ACM-G11 does benefit from features like GDDR6 memory and ray tracing cores, so there are more avenues for performance uplifts over integrated graphics than just having more cores.

As for end products that consumers will be buying, there are five SKUs in total that use these two dies: the two Arc 3 products use ACM-G11, while the three GPUs split across Arc 5 and 7 will use ACM-G10. So when Intel says Arc 3 is launching now, this is clearly a launch for the ACM-G11 die while the larger ACM-G10 has to wait.

In the Arc 3 series, we have an 8 core option (the A370M) and a 6 core option (the A350M), both with 4GB of GDDR6. Interestingly, despite Intel quoting a 96-bit memory bus for ACM-G11, this is being cut down for these products to just 64-bit to better align with 4GB of capacity. Were Intel to stick to 96-bit, these GPUs would have had to choose between 6 GB or 3 GB of memory in a standard configuration.
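The link between bus width and capacity can be sketched as follows. This assumes one GDDR6 chip per 32 bits of bus width, chips of either 1 GB or 2 GB, and no clamshell (two chips per channel) layouts; those assumptions are ours rather than anything Intel stated, and the function name is illustrative.

```python
GDDR6_CHIP_BUS_BITS = 32       # assumption: each GDDR6 chip occupies 32 bits of the bus
GDDR6_CHIP_SIZES_GB = (1, 2)   # assumption: common 8 Gb and 16 Gb chip densities


def standard_capacities_gb(bus_width_bits: int) -> list[int]:
    """Capacities reachable with one chip per 32-bit slice of the memory bus."""
    chips = bus_width_bits // GDDR6_CHIP_BUS_BITS
    return [chips * size for size in GDDR6_CHIP_SIZES_GB]


if __name__ == "__main__":
    for width in (96, 64):
        print(f"{width}-bit bus: {standard_capacities_gb(width)} GB")
```

Under these assumptions a 96-bit bus gives 3 GB or 6 GB, while a 64-bit bus gives 2 GB or 4 GB, which is why dropping to 64-bit lines up with the 4GB these parts ship with.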

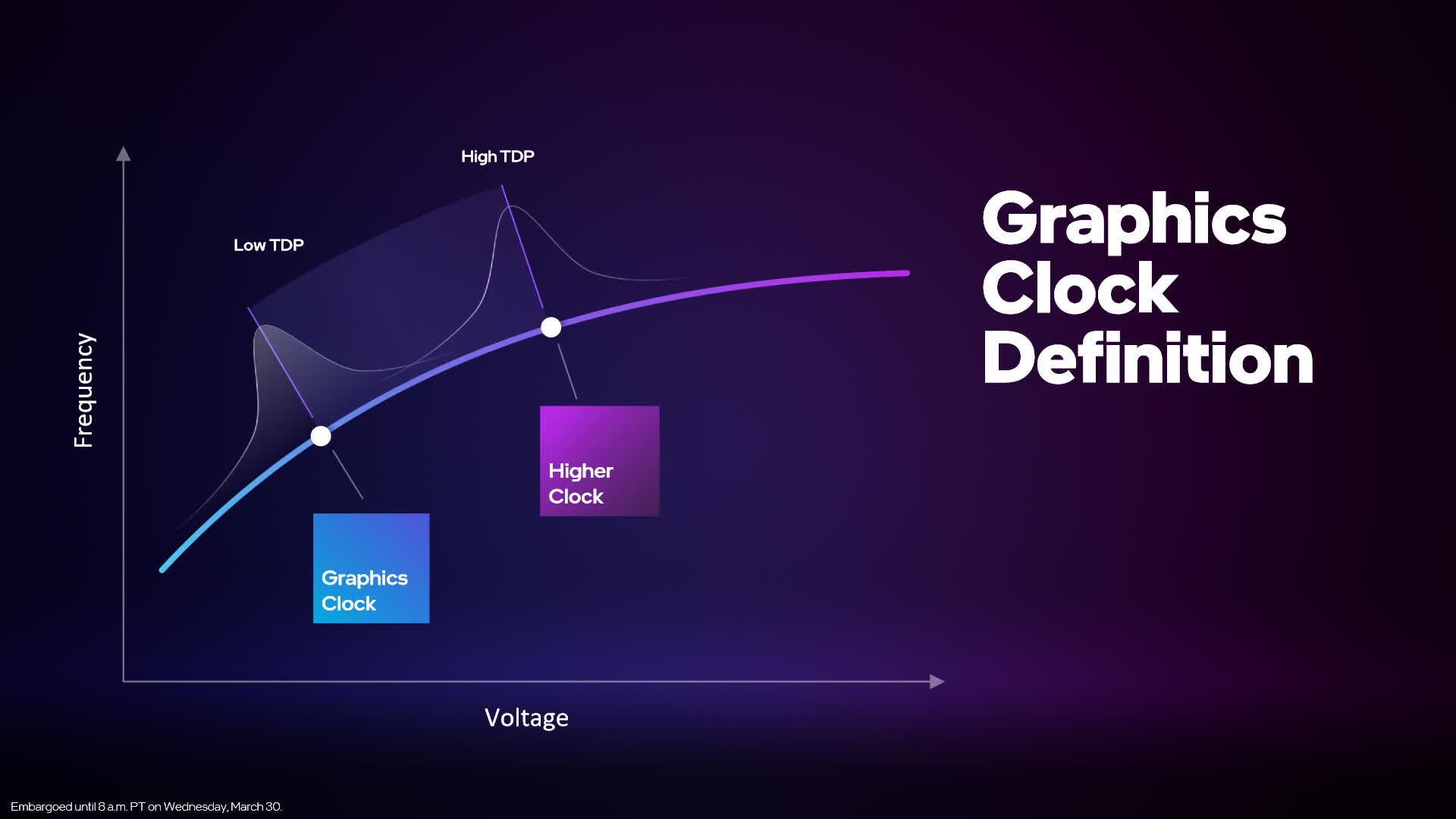

When it comes to clock speeds, the A370M is listed at 1550 MHz for its “graphics clock” while the A350M is at 1150 MHz. What is a graphics clock? Well, this is very similar to AMD’s game clock definition. Intel is saying the graphics clock isn’t the maximum frequency the GPU can run at, but rather the typical average frequency you’ll see across a range of workloads. Specifically for these mobile products, the graphics clock listed on the spec sheet relates to the lowest TDP configuration Intel offers. So for the A370M with a 35-50W power range, the 1550 MHz clock is what you’ll typically see at 35W, with 50W giving users a higher frequency. Intel is specifically being conservative here to avoid misleading customers: the clock speed is like a minimum specification.

Arc 5 with the A550M uses half of an ACM-G10 die, cut down to 16 Xe cores and a 128-bit GDDR6 memory bus supporting 8GB of VRAM. With a 60W TDP this will run at a 900 MHz graphics clock, which is quite low, but there will also be configurations up to 80W.

Then for Arc 7, we get the full-spec configurations for top-tier gaming laptops. The A770M includes the entire ACM-G10 die with 32 Xe cores, 16GB of GDDR6 on a 256-bit bus, and a graphics clock of 1650 MHz at 120W. Intel says that some configurations of Arc can run at the 2 GHz mark or higher, so this is likely what we’ll see for the 150W upper-tier variants. It’s also nice to see Intel being generous with the memory; 16GB really is what we should be getting for these higher-tier GPUs and that’s what Intel is providing. Expect similar for desktop cards.

The A730M is a cut-down ACM-G10 with 24 Xe cores and a 192-bit memory bus supporting 12GB of GDDR6. Its graphics clock is 1100 MHz with an 80-120W power range. So between all of these products, Intel is covering all the usual laptop power options from 25W right through to 120W.

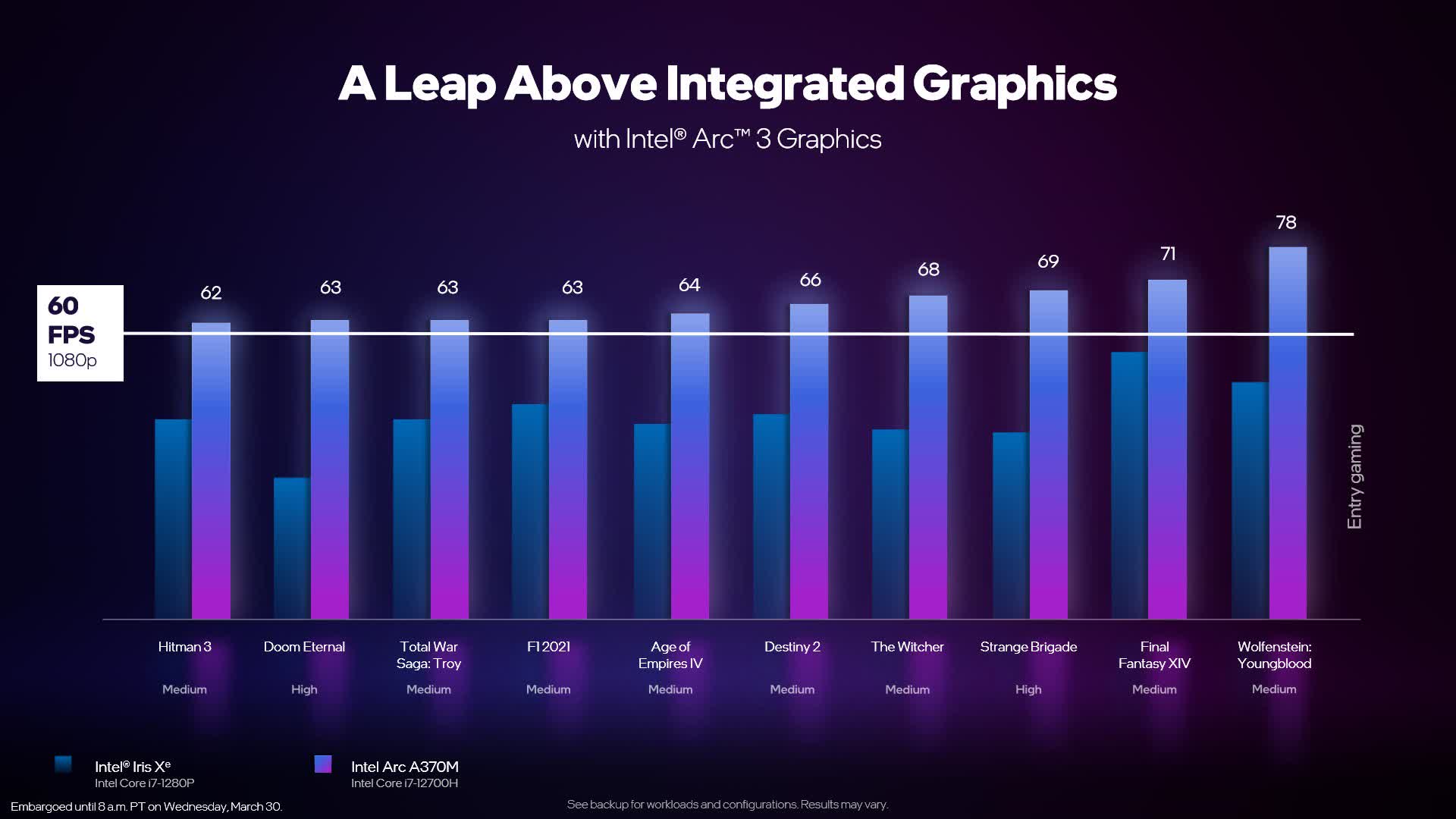

As Intel is mostly focusing on the Arc 3 launch for now, we did get a few performance benchmarks comparing the A370M in a system with Intel’s Core i7-12700H to the integrated 96 execution unit Xe GPU in the Core i7-1280P. The Arc A370M is not exactly setting a high performance standard, with Intel targeting just 1080p 60FPS using medium- to high-quality settings; however, this is a very basic low-power discrete GPU option for mainstream thin and light laptops. Depending on the game we seem to be getting between 25 and 50 percent more performance than Intel’s best iGPU option, though Intel didn’t specify what power configuration we’re looking at for the A370M.

However, I do have question marks over whether the A370M will be faster than the new Radeon 680M integrated GPU AMD offers in their Ryzen 6000 APUs. We know this iGPU as configured in the Ryzen 9 6900HS is roughly 35% faster than the 96 execution unit iGPU in the Core i9-12900HK, so it’s quite possible that the A370M and Radeon 680M will trade blows. This wouldn’t be a particularly amazing outcome for Intel’s smaller Arc Alchemist die, so we’ll have to hope that it does outperform the current best iGPU option when we get around to benchmarking it. Intel didn’t offer any performance estimates for how their products compare to either AMD or Nvidia options, and usually Intel doesn’t mind comparing their stuff to their competitors, so this is a bit of a red flag.
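The “trade blows” estimate follows from chaining the two sets of figures above against the same 96 execution unit baseline. This is only a back-of-the-envelope sketch: it treats the 96 EU iGPU as performing identically in the Core i7-1280P and Core i9-12900HK, which is an assumption, and the variable names are illustrative.

```python
# Performance relative to Intel's 96 EU iGPU (baseline = 1.0), from the text above.
A370M_VS_IGPU = (1.25, 1.50)   # Intel's claimed 25 to 50 percent uplift
RADEON_680M_VS_IGPU = 1.35     # roughly 35% faster


def a370m_vs_680m(a370m_range=A370M_VS_IGPU, r680m=RADEON_680M_VS_IGPU):
    """Estimated A370M performance relative to the Radeon 680M (low, high)."""
    low, high = a370m_range
    return low / r680m, high / r680m


if __name__ == "__main__":
    low, high = a370m_vs_680m()
    print(f"A370M vs Radeon 680M: {low:.2f}x to {high:.2f}x")
```

On these numbers the A370M would land anywhere from about 7% behind to about 11% ahead of the Radeon 680M, which is why actual benchmarks are needed to settle it.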

Alright, let’s now breeze through some of the features Arc GPUs will be offering. The first is that the Xe cores themselves are able to run floating point, integer and XMX instructions concurrently. The vector engines themselves have separate FP and INT units, so as with other modern GPUs you’d expect concurrent utilization, and that’s possible here in line with other architectures.

The media engine looks to be extremely powerful. These Arc GPUs are the first to offer AV1 hardware encoding acceleration, so this isn’t just decoding like AMD and Nvidia’s latest GPUs offer; this is full encoding support as well. We also get the usual support for H.264 and HEVC with up to 8K 12-bit decodes and 8K 10-bit encodes.

AV1 encoding support is huge for moving forward the AV1 ecosystem, especially for content creators that might want to leverage the higher coding efficiency AV1 offers over older codecs. However, Intel’s demo here was a bit bizarre, showing AV1 for game streaming purposes. It’s all well and good that Arc GPUs can stream Elden Ring in an AV1 codec, but this isn’t actually useful in practice right now, as major streaming services like Twitch and YouTube don’t support AV1 ingest. In fact, Twitch right now doesn’t even support HEVC, so I wouldn’t hold your breath for AV1 support any time soon. Arc GPUs supporting this will be much more useful for creator productivity workloads for now.

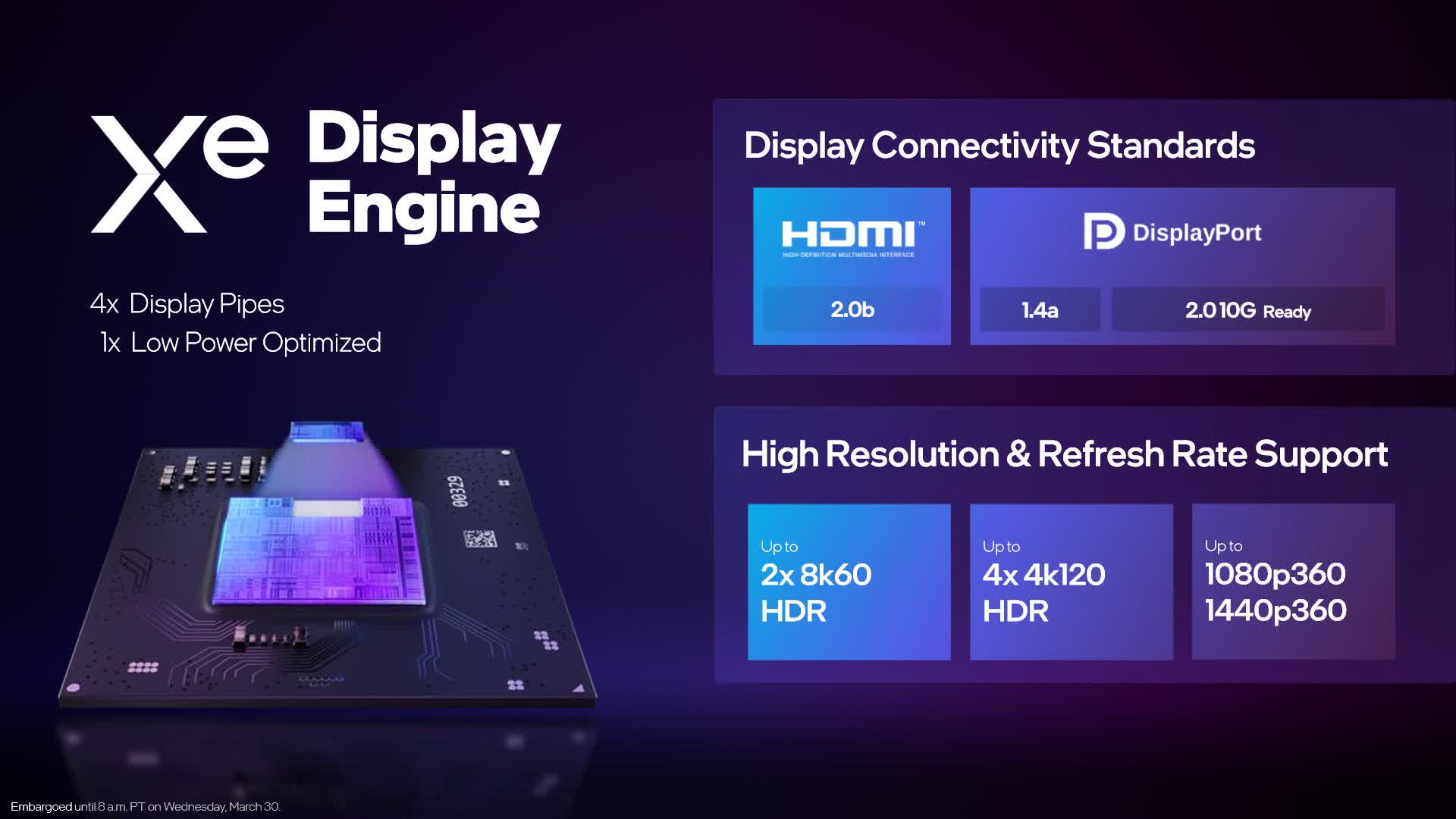

While the media engine is looking great, the display engine… not so much. Intel is supporting DisplayPort 1.4a and HDMI 2.0b here, and claiming it’s DisplayPort 2.0 10G ready. However, there’s no support for HDMI 2.1, which is a pretty ridiculous omission given the current state of GPUs. Not only has the HDMI 2.1 specification been available since late 2017, it’s been integrated into both AMD and Nvidia GPUs since 2020, with all sorts of displays now including HDMI 2.1. Using last-generation HDMI is a terrible misstep and hurts compatibility, especially with TVs, which usually don’t offer DisplayPort.

Intel’s solution for OEMs wanting to integrate HDMI 2.1 is building in a DisplayPort to HDMI 2.1 converter using an external chip, but this is hardly an ideal solution, especially for laptops which are constrained on size and power. Intel didn’t want to elaborate too much on the HDMI 2.1 issue, so I’m still not sure whether this only applies to certain products, but I’d be pretty disappointed if their desktop Arc products don’t support HDMI 2.1 natively, and it’s not looking good in that area.

Another disappointing revelation from Intel’s media conference was in relation to XeSS. Intel is showing support for 14 XeSS titles when the technology launches with Arc 5 and 7 GPUs this summer; however, there’s a catch here: the first implementation of XeSS will only support Intel’s XMX instructions and therefore be exclusive to Intel GPUs. As a refresher, XMX are Intel’s Xe Matrix Extensions, basically an equivalent to Nvidia’s Tensor operations, which are vendor exclusive and vendor optimized. As this version of XeSS is designed to run on Arc’s XMX cores, it only runs on Arc GPUs.

XeSS will eventually support other GPUs through a separate pipeline, the DP4a pipeline, which will work on GPUs supporting Shader Model 6.4 and above, so that’s Nvidia Pascal and newer, plus AMD RDNA and newer. However, Intel mentioned in a tidbit that the DP4a version will not be available at the same time as the XMX version, with the initial focus going towards XeSS via XMX on Intel GPUs. This is despite Intel previously saying XeSS uses a single API with one library that then has two paths inside for each version depending on the hardware. It seems that while this may be the goal eventually, possibly with a second iteration of XeSS or an update down the line, the initial XeSS implementation is XMX only.

This isn’t good news for XeSS and could make the technology dead on arrival. Around the time XeSS is supposed to launch, AMD will be releasing FidelityFX Super Resolution 2.0, which is a temporal upscaling solution that will work on all GPUs at launch. I don’t see the incentive for developers to integrate XeSS into their games if it only works on Arc GPUs, which will only be a minuscule fraction of the total GPU market, especially if they could use FSR 2.0 instead. Intel can’t afford to go down the DLSS path with exclusivity; that works for Nvidia as they have a dominant market share and developers integrating DLSS know they can at least target a large percentage of customers. Intel doesn’t have that in the GPU market yet and won’t any time soon, so releasing the version of XeSS that works on competitor GPUs is crucial for adoption of that technology.

One technology Intel mentioned did catch my eye though, and that’s Smooth Sync. This is a technology built into the display engine that can blur the boundary between two frames when you’re playing in a Vsync-off configuration. This is mostly intended for basic fixed refresh rate displays where you still want to game at a high frame rate above the monitor’s refresh rate for latency benefits. Intel says this only adds 0.5ms of latency for a 1080p frame. Unfortunately, the demo image here is simulated, but I’ll be keen to check out how effective this is in practice.

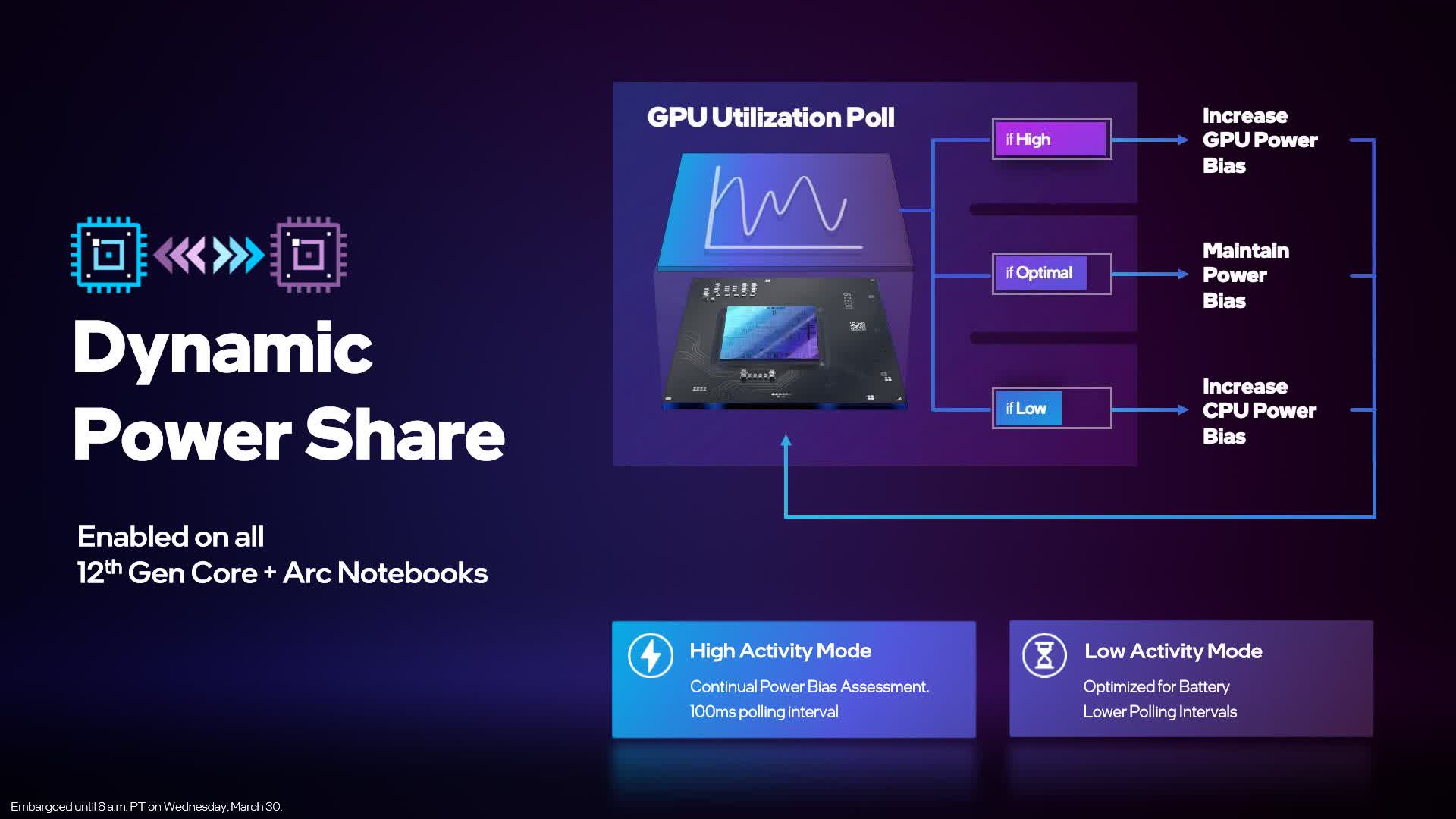

Intel also announced a technology called Dynamic Power Share, which is essentially a copy of AMD’s SmartShift and Nvidia’s Dynamic Boost designed to work with laptops that have Intel CPUs and Intel GPUs. As we’ve seen from SmartShift and Dynamic Boost, these technologies balance the total power budget of a laptop between the CPU and GPU depending on the demands of the workload, and that’s exactly what Dynamic Power Share brings.
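Intel hasn’t detailed how Dynamic Power Share decides on the split, so the following is only a toy illustration of the general idea of balancing a shared budget by demand. The proportional policy, the function name and the wattage figures are all hypothetical and are not Intel’s algorithm.

```python
def share_power(total_w: float, cpu_demand_w: float, gpu_demand_w: float) -> tuple[float, float]:
    """Toy policy: grant both requests if they fit, otherwise scale them proportionally.

    Hypothetical illustration only; the real Dynamic Power Share logic is not public here.
    """
    demand = cpu_demand_w + gpu_demand_w
    if demand <= total_w:
        return cpu_demand_w, gpu_demand_w
    scale = total_w / demand
    return cpu_demand_w * scale, gpu_demand_w * scale


if __name__ == "__main__":
    # A GPU-heavy game: the GPU asks for far more than the CPU within an 80W budget.
    print(share_power(80, cpu_demand_w=60, gpu_demand_w=100))
    # A light workload that fits inside the budget is left untouched.
    print(share_power(80, cpu_demand_w=20, gpu_demand_w=40))
```

A real implementation would also have to respect per-component limits and thermals, which this sketch ignores.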

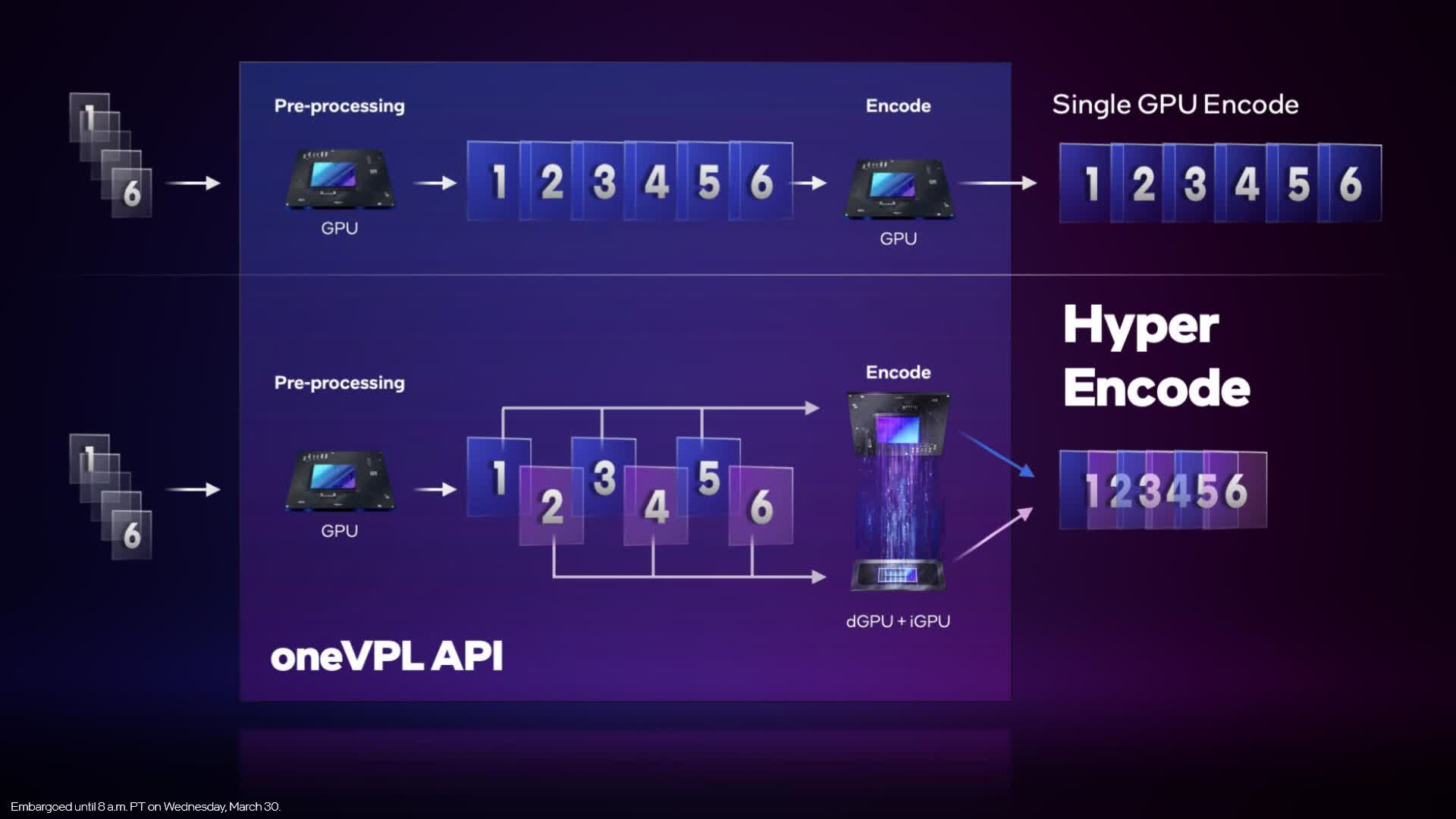

Then we have two other technologies, one called Hyper Encode, which is able to combine the media encoding engine on an Intel CPU with the encoding engine on the GPU for increased performance. In supported applications using the oneVPL API, this essentially splits up the encoding process between the two engines before stitching the results back together. Intel says this can provide up to a 60% performance uplift over using one encoding engine. And there’s a similar tech for compute called Hyper Compute, which offers up to 24% more performance.
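The split-and-stitch idea can be sketched in a few lines. This is a conceptual illustration only: it does not use the oneVPL API, `fake_encode` is a stand-in for a hardware encoder, and the engine names and chunk size are made up for the example.

```python
from concurrent.futures import ThreadPoolExecutor


def fake_encode(engine: str, chunk: list[int]) -> list[str]:
    """Stand-in for a hardware encoder: tags each frame with the engine that handled it."""
    return [f"{engine}:{frame}" for frame in chunk]


def hyper_encode_sketch(frames: list[int], chunk_size: int = 4) -> list[str]:
    """Alternate chunks of frames between two engines, then stitch them back in order."""
    chunks = [frames[i:i + chunk_size] for i in range(0, len(frames), chunk_size)]
    engines = ["cpu_media", "gpu_media"]  # hypothetical names for the two encode engines
    with ThreadPoolExecutor(max_workers=2) as pool:
        futures = [pool.submit(fake_encode, engines[i % 2], chunk)
                   for i, chunk in enumerate(chunks)]
        # Collect results in submission order so the output stays in frame order.
        encoded = [future.result() for future in futures]
    return [frame for chunk in encoded for frame in chunk]


if __name__ == "__main__":
    print(hyper_encode_sketch(list(range(10))))
```

Because the chunks are independent, both engines can work at the same time, which is where an uplift over a single engine would come from.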

Lastly, Intel showed off their new control center as part of their driver suite, called Arc Control, which will be available for all of their GPU products. Intel’s driver suite did need a bit of an overhaul and is a key point of contention for buyers considering an Arc GPU vs. Nvidia or AMD. Arc Control is set to at least improve their interface by offering features like performance metrics and tuning, creator features like background removal for webcams, built-in driver update support and of course all the usual settings. And unlike GeForce Experience, it won’t require a user account or login.

The bigger concern for Intel is driver optimization in the games themselves, and there’s nothing really that Intel can say here to satisfy buyers until reviewers can see how these products perform across a wide range of games. Intel say they will be providing day-1 driver updates in line with what Nvidia and AMD have been doing and have been putting a lot of work into developer relations, but this is a mammoth task for a new GPU vendor and something both AMD and Nvidia have had to build up over time, so it will definitely be interesting to see where it all lands when Arc GPUs are available to test.

And that’s pretty much it for Intel’s Arc GPU announcement. Bit of a mixed bag if I’m honest here: there are definitely some positive points and things to look forward to, but also some disappointments around the technologies and features. With Intel only ready to launch Arc 3 series GPUs at this point rather than the more powerful Arc 5 and 7 series, which appear at least 3 months away, in some respects it feels like this launch was mostly about Intel fulfilling their promise of a Q1 2022 launch for Arc. I’m sure ideally Intel wouldn’t be launching low-end products first. We still have yet another wait to see what the big GPUs have in store for us.

With that said, it was nice to see some actual specifications for Arc GPU dies and SKUs at this point, including memory configurations, which have lined up relatively well with a lot of what the rumors have been saying. I’m also glad to see Intel pushing ahead with AV1 hardware encoding acceleration, the first of any vendor to offer that feature.

However, I was pretty unimpressed to see the lack of HDMI 2.1 support, and the news that XeSS will be launching only with Intel-exclusive XMX support to begin with. That, combined with delays for high-end SKUs that push closer to the launch of next-gen Nvidia and AMD GPUs, means there are certainly a lot of hurdles for Intel to overcome with this launch. But either way we’ll hopefully be able to benchmark Arc discrete graphics soon.
