In context: One of the more intriguing topics driving evolution in the technology world is edge computing. After all, how can you not get excited about a concept that promises to bring distributed intelligence across a multitude of interconnected computing resources, all working together to achieve a single goal?

Trying to distribute computing tasks across multiple locations and then coordinate those efforts into a cohesive, meaningful whole is a lot harder than it first appears. This is particularly true when attempting to scale small proof-of-concept projects into full-scale production.

Issues like moving enormous amounts of data from location to location (which, ironically, was supposed to be unnecessary with edge computing), as well as overwhelming demands to label that data, are just two of several factors that have conspired to make successful edge computing deployments the exception rather than the rule.

IBM’s Research Group has been working to help overcome some of these challenges for several years now. Recently it has begun to see success in industrial environments like automobile manufacturing by taking a different approach to the problem. In particular, the company has been rethinking how data is analyzed at various edge locations and how AI models are shared with other sites.

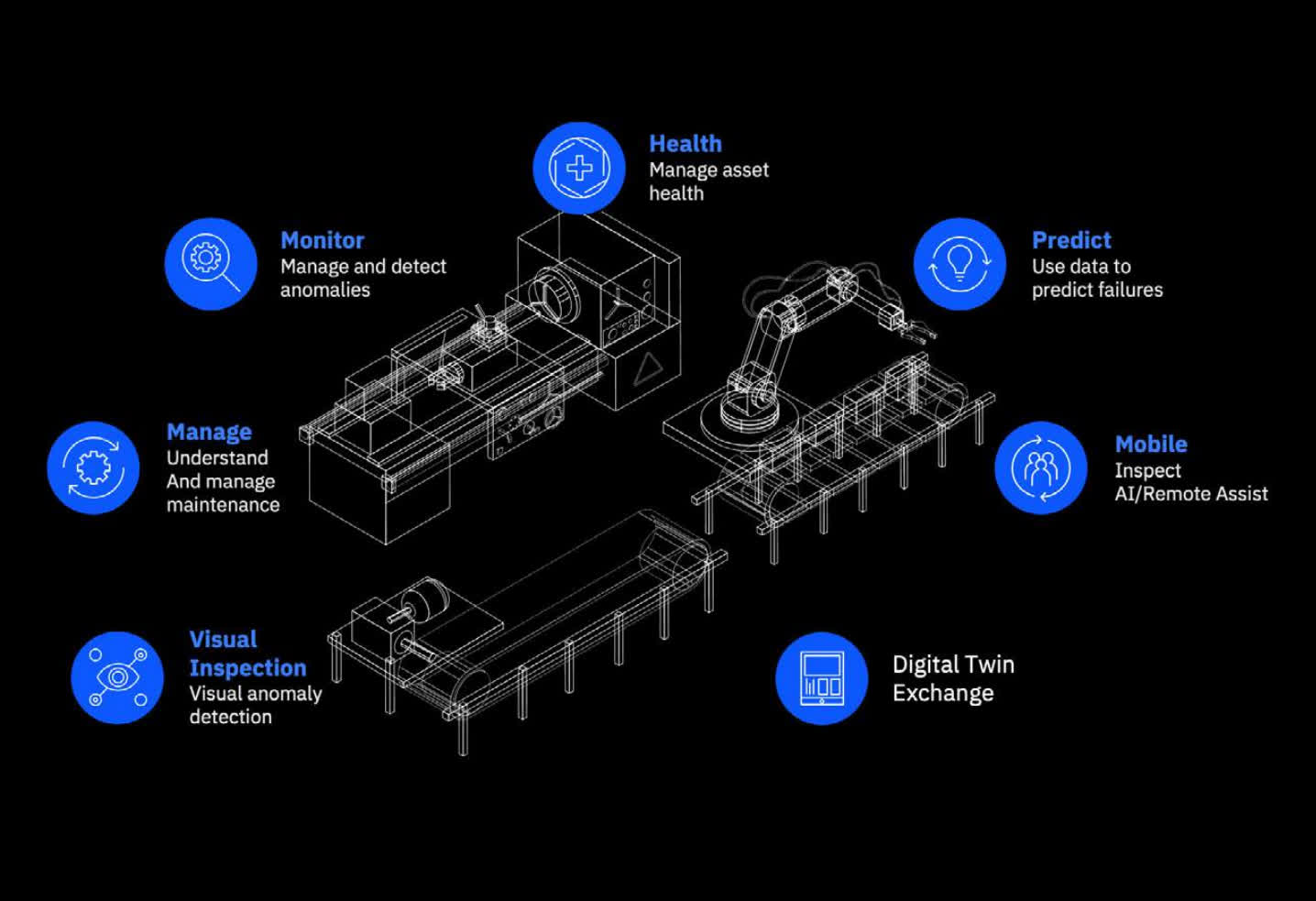

At car manufacturing plants, for example, most companies have started to use AI-powered visual inspection models that help spot manufacturing flaws that may be difficult or too costly for humans to recognize. Proper use of tools like IBM’s Maximo Applications Suite’s Visual Inspection Solution with Zero D (Defects or Downtime) can both save car manufacturers significant amounts of money by avoiding defects and keep the manufacturing lines running as quickly as possible. Given the supply chain-driven constraints that many car companies have faced recently, that second point has become particularly critical.

The real trick, however, is getting to the Zero D aspect of the solution, because inconsistent results based on wrongly interpreted data can actually have the opposite effect, especially if that wrong data ends up being promulgated across multiple manufacturing sites through inaccurate AI models. To avoid costly and unnecessary production line shutdowns, it’s critical to make sure that only the appropriate data is used to generate the AI models, and that the models themselves are checked for accuracy on a regular basis to catch any flaws that wrongly labelled data might create.

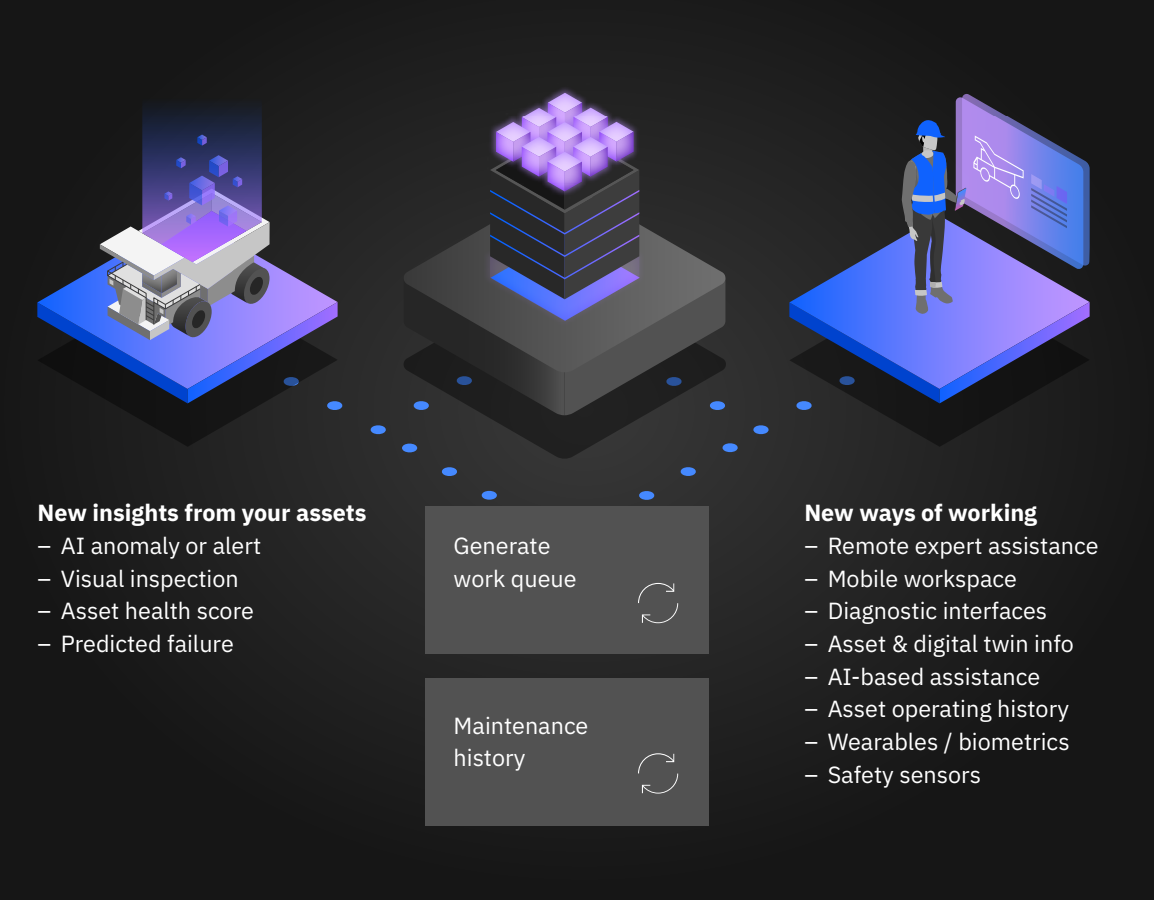

This “recalibration” of the AI models is the essence of the secret sauce that IBM Research is bringing to manufacturers, and in particular to a major US automotive OEM. IBM is working on what it calls Out of Distribution (OOD) Detection algorithms, which can help determine whether the data being used to refine the visual models is outside an acceptable range and might, therefore, cause the model to perform an inaccurate inference on incoming data. Most importantly, it does this work on an automated basis, both to avoid the slowdowns that would come from time-consuming human labelling efforts and to enable the work to scale across multiple manufacturing sites.
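
To make the idea of data falling "outside an acceptable range" concrete, here is a minimal Python sketch. It is not IBM's algorithm, which the article does not describe. It assumes each image has already been reduced to a numeric feature vector, and it uses a simple per-dimension z-score test as a stand-in. The function names and the threshold of 3.0 are hypothetical choices for illustration.

```python
from statistics import mean, pstdev


def fit_reference(features):
    """Compute per-dimension mean and standard deviation of known-good feature vectors."""
    columns = list(zip(*features))
    means = [mean(c) for c in columns]
    # Replace a zero spread with a tiny value so the division below is always defined.
    stds = [pstdev(c) or 1e-9 for c in columns]
    return means, stds


def ood_score(sample, means, stds):
    """Return the largest absolute z-score of the sample across all dimensions."""
    return max(abs(x - m) / s for x, m, s in zip(sample, means, stds))


def is_out_of_distribution(sample, means, stds, threshold=3.0):
    """Flag the sample when it sits more than `threshold` deviations from the reference."""
    return ood_score(sample, means, stds) > threshold


# Hypothetical two-dimensional features extracted from known-good inspection images.
reference = [[1.0, 10.0], [1.2, 11.0], [0.8, 9.0], [1.1, 10.5], [0.9, 9.5]]
means, stds = fit_reference(reference)

print(is_out_of_distribution([1.05, 10.2], means, stds))  # False: close to the reference data
print(is_out_of_distribution([5.0, 10.0], means, stds))   # True: far outside the first dimension
```

A sample flagged this way would be held back from model refinement, or routed to a person for review, rather than silently fed into the next training round.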

A byproduct of OOD Detection, called Data Summarization, is the ability to select data for manual inspection, labeling and updating the model. In fact, IBM is working toward a 10-100x reduction in the amount of data traffic that currently occurs with many early edge computing deployments. In addition, this approach results in 10x better utilization of the person hours spent on manual inspection and labeling by eliminating redundant data (near-identical images).
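
The article does not say how Data Summarization picks its samples, so the following Python sketch only illustrates the general idea of dropping near-identical items before they are transmitted or labeled. It assumes each image is represented by a feature vector and uses a greedy distance filter. The `summarize` function and its `min_distance` parameter are hypothetical.

```python
import math


def summarize(features, min_distance=0.5):
    """Greedily keep the indices of samples that are not near-duplicates of any kept sample."""
    kept = []
    for i, vec in enumerate(features):
        # Keep this sample only if it is far enough from every sample kept so far.
        if all(math.dist(vec, features[j]) >= min_distance for j in kept):
            kept.append(i)
    return kept


# Hypothetical feature vectors: items 0/1 and 2/3 are near-identical pairs.
features = [(0.0, 0.0), (0.01, 0.0), (1.0, 1.0), (1.0, 1.02), (3.0, 0.0)]
print(summarize(features))  # [0, 2, 4]
```

Only the kept samples would need to leave the edge site or reach a human labeler, which is where the savings in data traffic and person hours would come from in a scheme like this.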

In combination with state-of-the-art techniques like OFA (Once For All) model architecture exploration, the company is hoping to reduce the size of the models by as much as 100x as well. This enables more efficient edge computing deployments. Plus, in conjunction with automation technologies designed to distribute these models and data sets more easily and accurately, it lets companies create AI-powered edge solutions that can successfully scale from smaller POCs to full production deployments.

Efforts like the one being explored at a major US automotive OEM are an important step toward the viability of these solutions for markets like manufacturing. However, IBM also sees the opportunity to apply these concepts of refining AI models to many other industries, including telcos, retail, industrial automation and even autonomous driving. The trick is to create solutions that work across the inevitable heterogeneity that occurs with edge computing and leverage the unique value that each edge computing site can produce on its own.

As edge computing evolves, it’s clear that it’s not necessarily about collecting and analyzing as much data as possible, but rather about finding the right data and using it as wisely as possible.

Bob O’Donnell is the founder and chief analyst of TECHnalysis Research, LLC, a technology consulting firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow him on Twitter @bobodtech.
