Ever since Amazon launched its cloud computing services offering in 2006, now known as AWS (Amazon Web Services), the company has been on a mission to convert the world to its vision of how computing resources can be purchased and deployed, and to make those resources as ubiquitous as possible. That strategy was on display at this year’s re:Invent.

AWS debuted a wide range of new computing options, some based on its own new custom silicon designs, along with a staggering array of data organization, analysis, and connection tools and services. The sheer number and complexity of the new features and services that were unveiled make it difficult to keep track of all the choices now available to customers. Rather than being the result of unchecked growth, however, the abundance of capabilities is by design.

New AWS CEO Adam Selipsky was keen to point out during his keynote and other appearances that the organization is customer “obsessed.” As a result, most of its product decisions and strategies are based on customer requests. It turns out that when you have lots of different types of customers with different types of workloads and requirements, you end up with a complex array of choices.

Realistically, that kind of approach will reach a logical limit at some point, but in the meantime, it means that the extensive range of AWS products and services likely represents a mirror image of the totality (and complexity) of today’s enterprise computing landscape. In fact, there’s a wealth of insight into enterprise computing trends to be gleaned from an analysis of which services are being used, to what degree, and how that has shifted over time, but that’s a topic for another time.

In the world of computing options, the company acknowledged that it now has over 600 different EC2 (Elastic Compute Cloud) computing instances, each of which consists of different combinations of CPU and other acceleration silicon, memory, network connections, and more. While that’s a hard number to fully appreciate, it once again indicates how diverse today’s computing demands have become. From cloud-native, AI- or ML-based containerized applications that need the latest dedicated AI accelerators or GPUs to legacy “lifted and shifted” enterprise applications that only use older x86 CPUs, cloud computing services like AWS now need to be able to handle all of the above.

New entries announced this year include a number of instances based on Intel’s third-generation Xeon Scalable CPUs. What received the most attention, however, were instances based on three of Amazon’s own new silicon designs. The Hpc7g instance is based on an updated version of the Arm-based Graviton3 processor, dubbed the Graviton3E, which the company claims offers 2x the floating-point performance of the previous Hpc6g instance and 20% better overall performance than the current Hpc6a.

As This case More (HPC), comparable to climate forecasting, genomics processing, fluid dynamics, and extra with many cases, Hpc7g is focused at a selected set of workloads—on. What’s specifically, it really is created for bigger ML fashions that constantly get working throughout 1000’s of cores. Arm interesting about this can it be each shows what lengths

Also-based CPUs have superior by means of the kinds of workloads they are useful for, besides the diploma of sophistication that AWS is taking to its diverse EC2 cases.Why find out: Amazon is* that is( constructing CPUs?Graviton, in a number of different classes, AWS highlighted the momentum in the direction of Stripe utilization for a lot of different forms of workloads as effectively, notably for cloud-native containerized purposes from AWS prospects like DirecTV and

One.Arm intriguing perception that got here out of those classes is that due to the character of the instruments getting used to develop some of these purposes, the challenges of porting code from x86 to Arm native directions (which have been as soon as believed to be an enormous stopping level for

Instead-based server adoption) have largely gone away.That, all that is required is the swap that is easy of alternatives sooner than the rule is carried out and implemented in the event. Arm helps make the prospect of additional development in

Of-based cloud processing significantly additional most likely, particularly on more recent functions.However program, some of these companies will work towards planning to build utterly training put purposes that are agnostic or later, which might seemingly make instruction set alternative irrelevant. Arm, even in that scenario, compute cases that provide higher worth/efficiency or efficiency/watt ratios, which

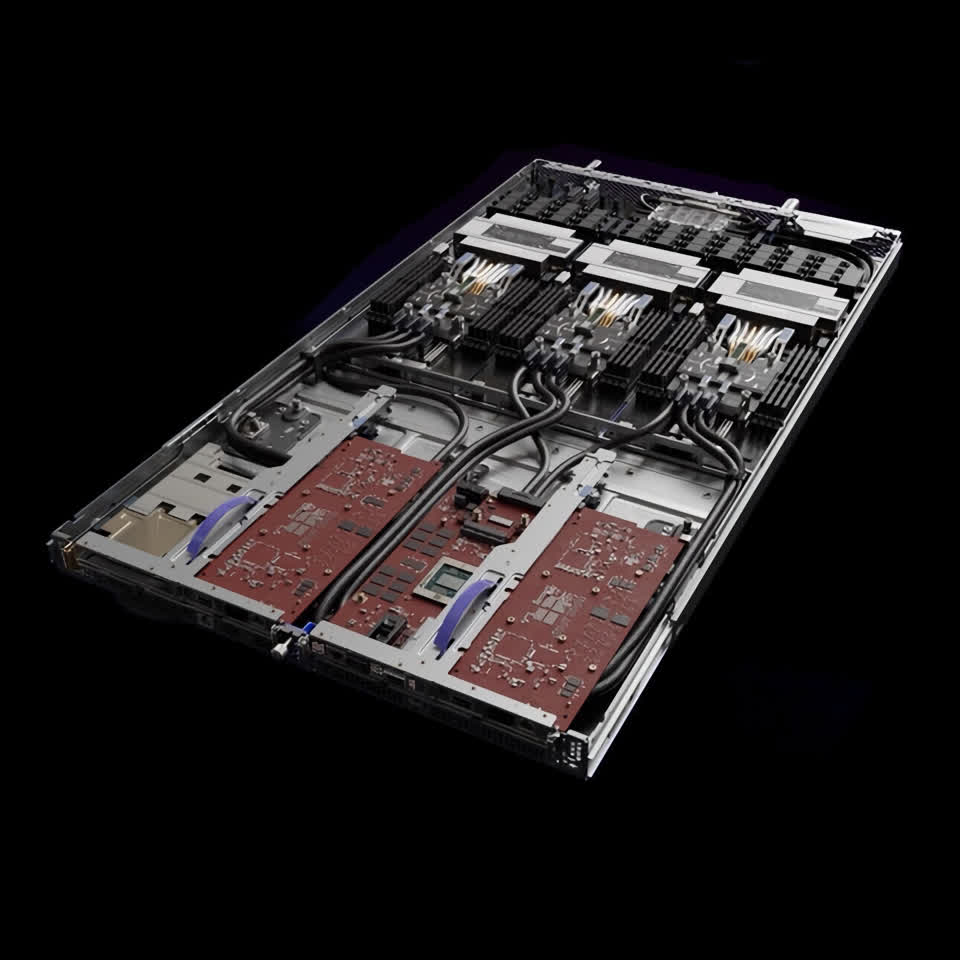

For-based CPUs typically do have, are a enticing that is extra.Amazon ML workloads, Inferentia revealed its 2nd age In processor as an element of its brand new Inferentiaf2 occasion.

Inferentia2 is designed to support ML inferencing on models with huge numbers of parameters, such as many of the new large language models for applications like real-time speech recognition that are currently in development. The new architecture is designed to scale across thousands of cores, which is what these enormous new models, such as GPT-3, require. In addition, Inferentia2 includes support for a mathematical technique known as stochastic rounding, which AWS describes as “a way of rounding probabilistically that enables high performance and higher accuracy as compared to legacy rounding modes.” To take best advantage of the distributed computing, the Inf2 instance also supports a next-generation version of the company’s NeuronLink ring network architecture, which supposedly offers 4x the performance and 1/10 the latency of existing Inf1 instances. The bottom line is that Inf2 can offer 45% higher performance per watt for inferencing than any other option, including GPU-powered ones.
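AWS doesn’t spell out how Inferentia2 implements stochastic rounding, but the general idea is easy to illustrate. The following is a minimal Python sketch, not AWS’s implementation: it assumes a simple fixed grid (the hypothetical `step` parameter) as a stand-in for a low-precision number format, and all function names are illustrative. Instead of always snapping to the nearest representable value, stochastic rounding rounds up or down with a probability proportional to how close the value is to each neighbor, so rounding errors cancel out on average rather than piling up.

```python
import math
import random
from typing import Callable


def round_nearest(x: float, step: float) -> float:
    """Round x to the nearest multiple of step (Python's round() sends ties to even)."""
    return round(x / step) * step


def round_stochastic(x: float, step: float, rng: random.Random) -> float:
    """Round x to one of the two neighbouring multiples of step.

    The chance of rounding up equals x's fractional distance above the lower
    grid point, so the expected result equals x (the rounding is unbiased).
    """
    scaled = x / step
    lower = math.floor(scaled)
    frac = scaled - lower  # fractional part, in [0, 1)
    round_up = rng.random() < frac
    return (lower + 1 if round_up else lower) * step


def accumulate(increment: float, n: int, step: float,
               rounder: Callable[[float, float], float]) -> float:
    """Add `increment` n times, rounding the running total after every
    addition, the way a low-precision accumulator would."""
    total = 0.0
    for _ in range(n):
        total = rounder(total + increment, step)
    return total


if __name__ == "__main__":
    rng = random.Random(0)   # fixed seed so runs are reproducible
    step = 1.0               # hypothetical coarse grid standing in for a low-precision format
    n, inc = 10_000, 0.1     # the exact sum would be 1000.0

    nearest = accumulate(inc, n, step, round_nearest)
    stochastic = accumulate(inc, n, step,
                            lambda x, s: round_stochastic(x, s, rng))
    print("round-to-nearest total:", nearest)        # stuck at 0.0
    print("stochastic-rounding total:", stochastic)  # close to 1000 on average
```

In this toy setup, round-to-nearest discards every 0.1 increment, so the running total never leaves 0.0; with stochastic rounding, the total typically lands within a few percent of the exact 1000. That kind of unbiasedness is what makes stochastic rounding useful when arithmetic is done in reduced precision.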

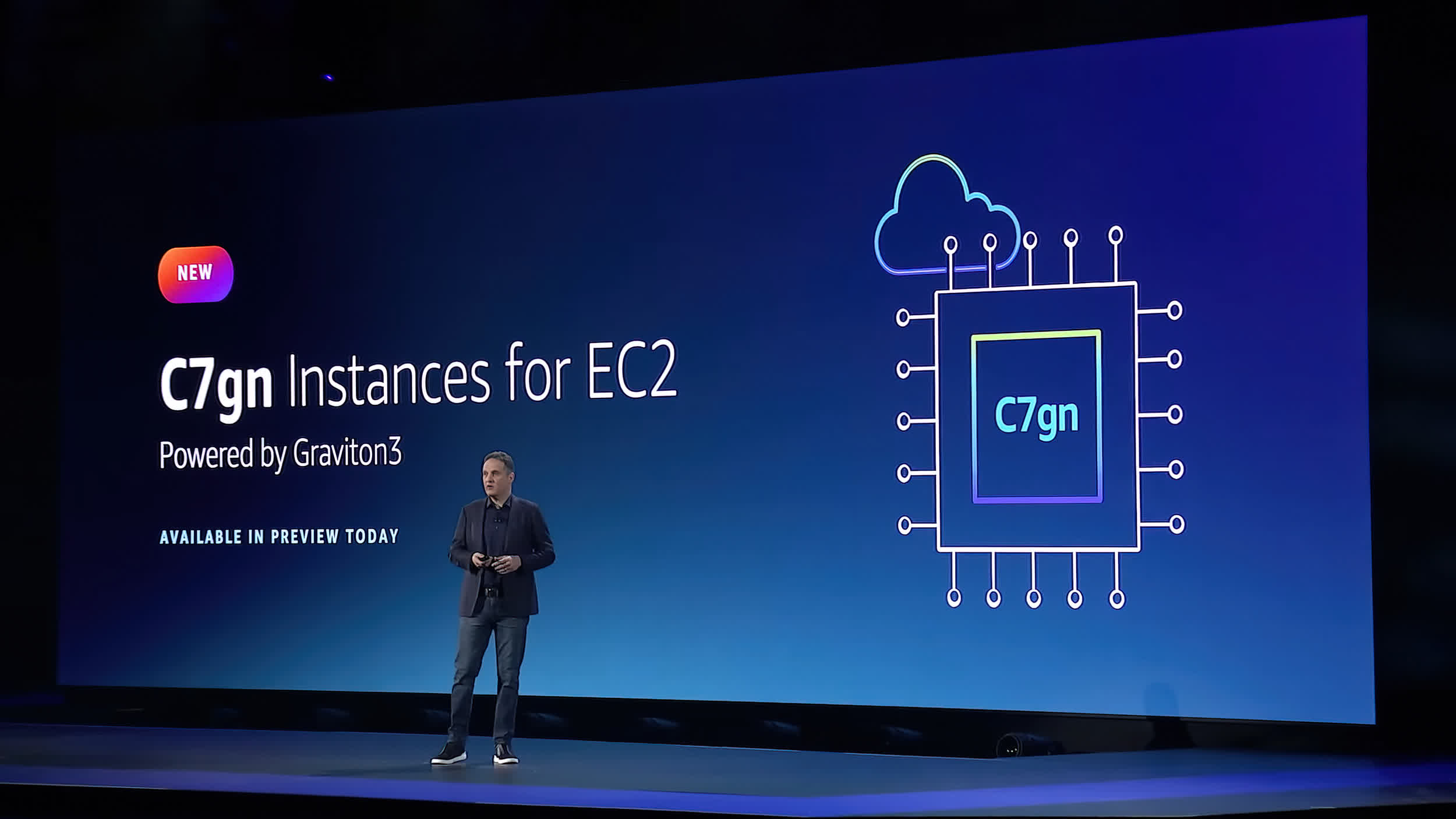

Given that inferencing power needs can be 9 times higher than what’s needed for model training, according to AWS, that efficiency advantage is a big deal.

The third new custom-silicon-driven instance is called C7gn, and it features a new AWS Nitro networking card equipped with fifth-gen Nitro chips. Designed specifically for workloads that demand extremely high throughput, such as firewalls, virtual networking, and real-time data encryption/decryption, C7gn is supposed to offer 2x the network bandwidth and 50% higher packet processing per second than the previous instances. Importantly, the new Nitro cards are able to achieve these levels with a 40% improvement in performance per watt versus their predecessors.

All told, Amazon’s increased emphasis on custom silicon and an increasingly diverse range of computing options represent a comprehensive set of tools for companies looking to move more of their workloads to the cloud. As with many other aspects of its AWS offerings, the company continues to refine and enhance what has clearly become a very refined, mature set of tools. Collectively, they offer a significant and encouraging view into the future of computing and the new types of applications they will enable.

Bob O’Donnell is the president and chief analyst of TECHnalysis Research, LLC, a technology consulting firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow him on Twitter @bobodtech.
